GPU Kernels

In addition to the default environments in Anaconda Notebooks, Anaconda provides access to remote GPU kernels powered by NVIDIA A10 GPUs. These kernels are ideal for complex, compute-intensive workloads that would otherwise take too long to run in a default environment. You can run an entire notebook on a GPU kernel or apply GPU acceleration only to specific cells.

What is a GPU kernel?

Anaconda’s GPU kernel runs a GPU-enabled environment called “anaconda-01 - nvidia-a10”. This environment contains the libraries, drivers, and configurations necessary for communicating with NVIDIA GPUs, as well as the ipykernel package to enable interactive computing in Python. See below for a list of all packages included in the default environment.

When you assign a GPU kernel to a notebook (or notebook cell), you're using the default GPU-enabled environment. For more information about kernels and environments, see Environments.

Note

At this time, Anaconda is offering free credits to select paid subscribers only. Free-tier subscribers who want to use GPU kernels can upgrade their subscription to gain access.

Assigning GPU kernels

There are a few methods you can use to assign a GPU kernel to either an entire notebook or an individual cell:

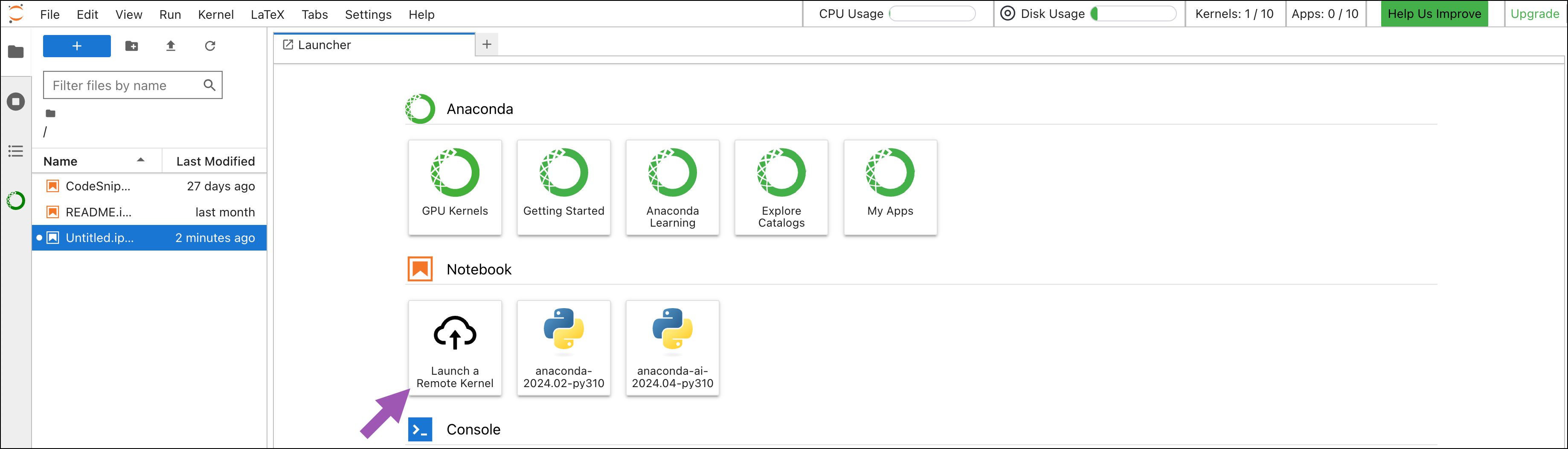

Open the Launcher.

Under Notebook, click Launch a Remote Kernel.

In the Launch a Kernel dialog, open the Environment dropdown and select a kernel.

Use the slider or type a number in the text box to reserve time for the kernel session (in minutes).

Assign a name for your kernel or use the default kernel name.

Click Launch a Kernel.

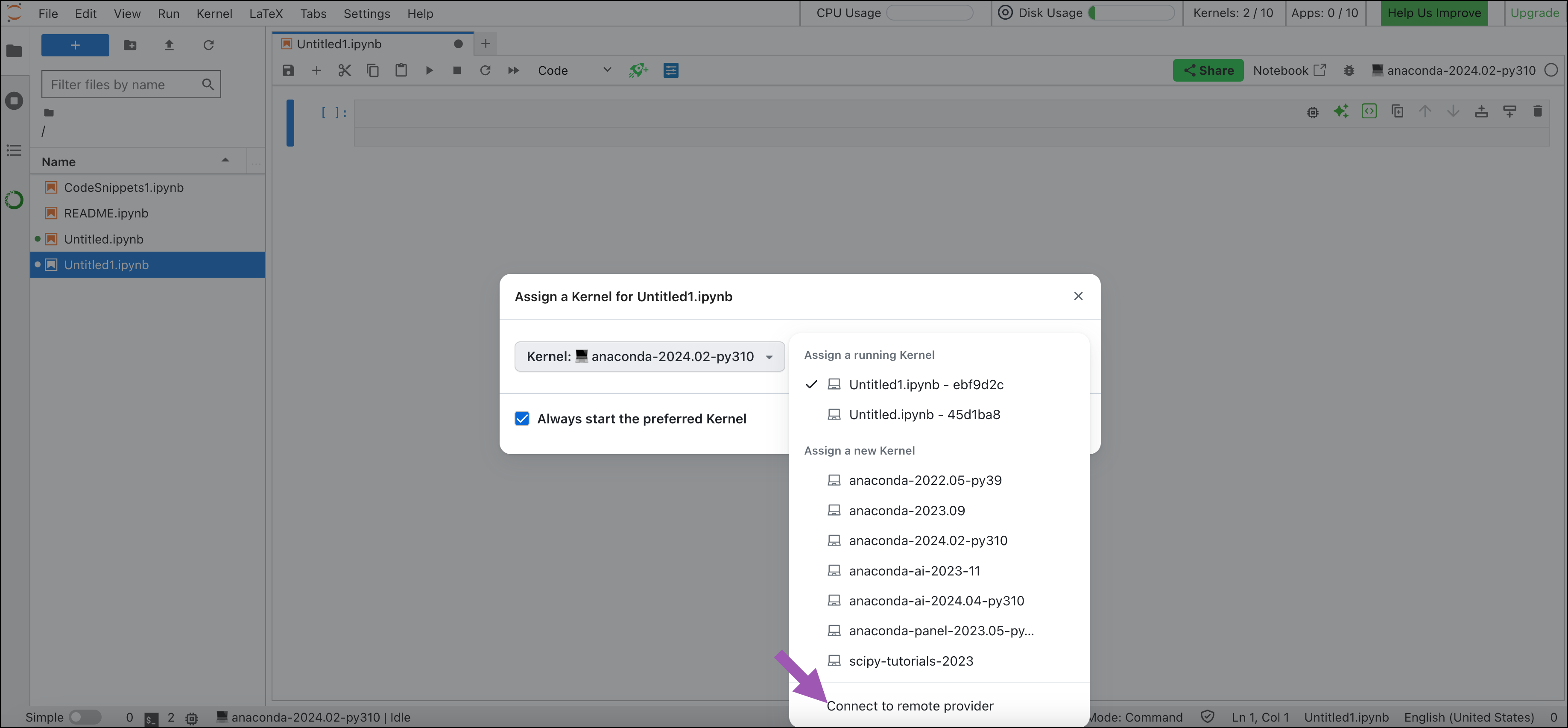

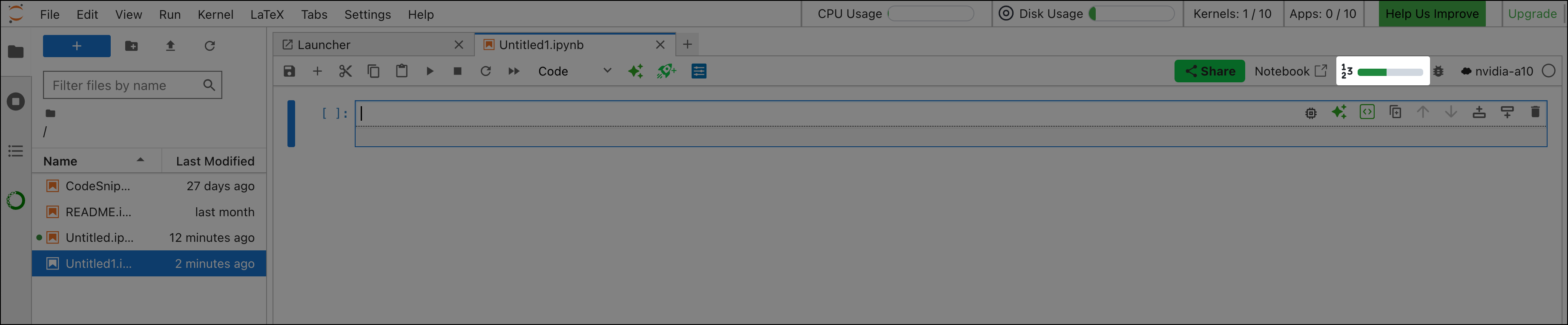

In an active notebook, click the kernel in the top right.

If you haven’t launched a remote kernel yet, select Connect to remote provider and follow the steps in the Launcher tab. Otherwise, select the remote kernel (the default kernel name is “nvidia-a10”).

In the Assign a Kernel dialog, make sure the remote kernel is selected in the dropdown.

Use the slider or type a number in the text box to reserve time for the kernel session (in minutes).

Warning

Don’t switch the Transfer variables toggle to On. Switching the toggle to On causes Notebooks to crash. This is a known issue that is currently being addressed.

Click Assign.

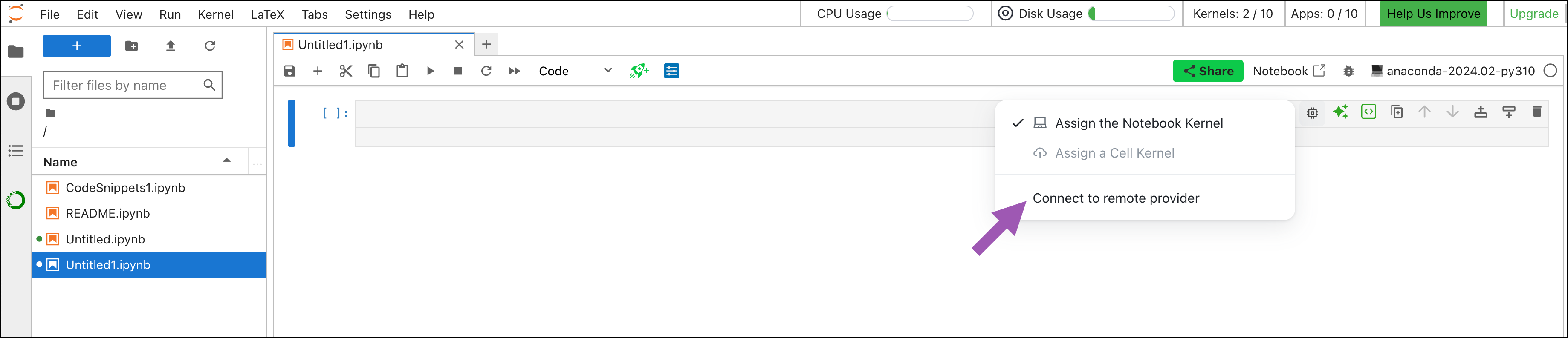

In an active cell, click Assign a specific kernel to the cell.

If you haven’t launched a remote kernel yet, select Connect to remote provider and follow the steps in the Launcher tab. Otherwise, continue to the next step.

Click Assign a Cell Kernel.

Select the Environment and Kernel name.

Click Assign from the Environment.

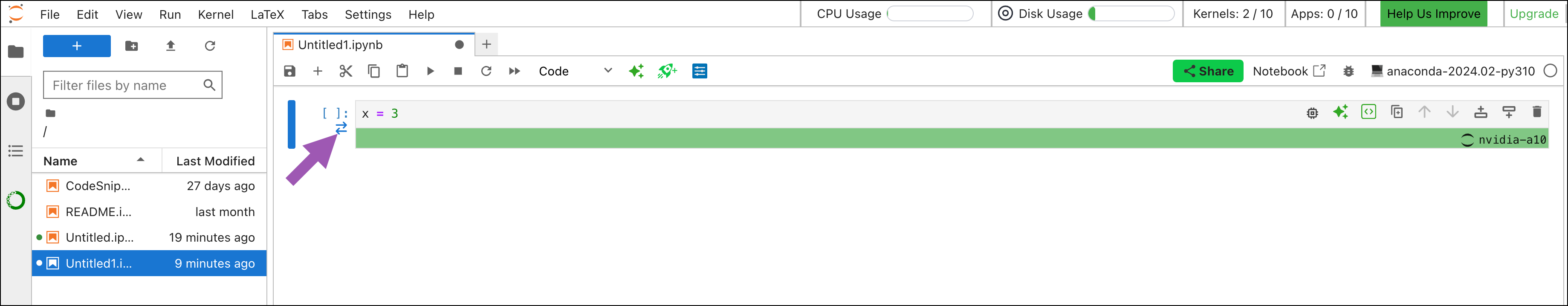

A green bar appears beneath the cell, which indicates that the cell has successfully connected to the GPU kernel.
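To double-check from code that a notebook or cell is really running on the GPU kernel, you can ask PyTorch (the `torch` package in this environment) whether it can see a CUDA device. The sketch below is a minimal illustration rather than an Anaconda-provided tool, and the helper name `describe_gpu` is hypothetical. It also runs where PyTorch isn't installed, in which case it simply reports that no GPU is available.

```python
import importlib.util


def describe_gpu() -> dict:
    """Report whether PyTorch can see a CUDA GPU from the current kernel.

    Returns a plain dict so the result is easy to print or assert on.
    If PyTorch isn't installed (e.g., in a default CPU environment),
    it reports no GPU instead of raising ImportError.
    """
    if importlib.util.find_spec("torch") is None:
        return {"torch_installed": False, "cuda_available": False, "devices": []}

    import torch  # imported lazily so this check works without PyTorch

    available = torch.cuda.is_available()
    devices = (
        [torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())]
        if available
        else []
    )
    return {"torch_installed": True, "cuda_available": available, "devices": devices}
```

Run `describe_gpu()` in a cell. On an A10 kernel you would expect `cuda_available` to be `True` and the device list to name the A10; in a default CPU environment it should report `False` and an empty list.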

Using GPU kernels

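Once you assign a GPU kernel to a notebook or cell, you can run your code as you normally would. Code that uses GPU-aware libraries, such as PyTorch with tensors placed on a CUDA device, should run considerably faster, especially for compute-intensive tasks like training or running deep learning models. Code that only uses CPU libraries, such as plain NumPy or pandas, doesn't use the GPU automatically.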
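For example, PyTorch only uses the GPU for tensors that are explicitly placed on a CUDA device. The sketch below picks `"cuda"` when a GPU is visible and falls back to `"cpu"` otherwise, then times a single matrix multiplication on the chosen device. The function names `pick_device` and `time_matmul` and the default 4096-by-4096 matrix size are illustrative assumptions; real speedups depend heavily on your workload.

```python
import time
from typing import Optional

try:
    import torch
except ImportError:  # e.g., a default environment without PyTorch
    torch = None


def pick_device() -> str:
    """Return "cuda" if PyTorch can see a GPU, otherwise "cpu"."""
    if torch is not None and torch.cuda.is_available():
        return "cuda"
    return "cpu"


def time_matmul(n: int = 4096, device: Optional[str] = None) -> float:
    """Time one n-by-n matrix multiplication on `device` and return seconds elapsed."""
    if torch is None:
        raise RuntimeError("PyTorch is not installed in this environment")
    device = device or pick_device()
    a = torch.rand(n, n, device=device)
    b = torch.rand(n, n, device=device)

    _ = a @ b  # warm-up: the first call pays one-time setup costs
    if device == "cuda":
        torch.cuda.synchronize()

    start = time.perf_counter()
    _ = a @ b
    if device == "cuda":
        torch.cuda.synchronize()  # GPU work is asynchronous; wait before stopping the clock
    return time.perf_counter() - start
```

Comparing `time_matmul(device="cpu")` with `time_matmul(device="cuda")` on the GPU kernel gives a rough sense of the speedup. The warm-up call and the `torch.cuda.synchronize()` calls matter: CUDA work is launched asynchronously, so without them the timer could stop before the GPU has finished.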

Anaconda's default GPU kernel, "anaconda-01 - nvidia-a10", is tied to a read-only GPU-enabled environment that includes Anaconda, AI, and NVIDIA packages such as PyTorch, Transformers, and the CUDA Toolkit.

Packages included in anaconda-01 - nvidia-a10

| Package Name | Package Version |
|---|---|
| _libgcc_mutex | 0.1 |
| _openmp_mutex | 4.5 |
| accelerate | 0.34.2 |
| aiohappyeyeballs | 2.4.0 |
| aiohttp | 3.10.5 |
| aiosignal | 1.3.1 |
| alembic | 1.13.1 |
| altair | 5.3.0 |
| annotated-types | 0.7.0 |
| anyio | 4.3.0 |
| aom | 3.8.2 |
| archspec | 0.2.3 |
| argon2-cffi | 23.1.0 |
| argon2-cffi-bindings | 21.2.0 |
| arrow | 1.3.0 |
| asttokens | 2.4.1 |
| async-lru | 2.0.4 |
| async_generator | 1.1 |
| attrs | 23.2.0 |
| aws-c-auth | 0.7.17 |
| aws-c-cal | 0.6.11 |
| aws-c-common | 0.9.15 |
| aws-c-compression | 0.2.18 |
| aws-c-event-stream | 0.4.2 |
| aws-c-http | 0.8.1 |
| aws-c-io | 0.14.7 |
| aws-c-mqtt | 0.10.3 |
| aws-c-s3 | 0.5.7 |
| aws-c-sdkutils | 0.1.15 |
| aws-checksums | 0.1.18 |
| aws-crt-cpp | 0.26.6 |
| aws-sdk-cpp | 1.11.267 |
| babel | 2.14.0 |
| beautifulsoup4 | 4.12.3 |
| blas | 2.122 |
| blas-devel | 3.9.0 |
| bleach | 6.1.0 |
| blinker | 1.7.0 |
| blis | 0.7.11 |
| blosc | 1.21.5 |
| bokeh | 3.4.1 |
| boltons | 24.0.0 |
| bottleneck | 1.3.8 |
| brotli | 1.1.0 |
| brotli-bin | 1.1.0 |
| brotli-python | 1.1.0 |
| brunsli | 0.1 |
| bzip2 | 1.0.8 |
| c-ares | 1.28.1 |
| c-blosc2 | 2.14.4 |
| ca-certificates | 2024.8.30 |
| cached-property | 1.5.2 |
| cached_property | 1.5.2 |
| catalogue | 2.0.10 |
| certifi | 2024.8.30 |
| certipy | 0.1.3 |
| cffi | 1.16.0 |
| charls | 2.4.2 |
| charset-normalizer | 3.3.2 |
| click | 8.1.7 |
| cloudpathlib | 0.19.0 |
| cloudpickle | 3.0.0 |
| colorama | 0.4.6 |
| comm | 0.2.2 |
| conda | 24.3.0 |
| conda-libmamba-solver | 24.1.0 |
| conda-package-handling | 2.2.0 |
| conda-package-streaming | 0.9.0 |
| confection | 0.1.5 |
| configurable-http-proxy | 4.6.1 |
| contourpy | 1.2.1 |
| cryptography | 42.0.5 |
| cuda | 12.2.2 |
| cuda-cccl | 12.2.140 |
| cuda-command-line-tools | 12.2.2 |
| cuda-compiler | 12.2.2 |
| cuda-cudart | 12.2.140 |
| cuda-cudart-dev | 12.2.140 |
| cuda-cudart-static | 12.2.140 |
| cuda-cuobjdump | 12.2.140 |
| cuda-cupti | 12.2.142 |
| cuda-cupti-static | 12.2.142 |
| cuda-cuxxfilt | 12.2.140 |
| cuda-demo-suite | 12.2.140 |
| cuda-documentation | 12.2.140 |
| cuda-driver-dev | 12.2.140 |
| cuda-gdb | 12.2.140 |
| cuda-libraries | 12.2.2 |
| cuda-libraries-dev | 12.2.2 |
| cuda-libraries-static | 12.2.2 |
| cuda-nsight | 12.2.144 |
| cuda-nsight-compute | 12.2.2 |
| cuda-nvcc | 12.2.140 |
| cuda-nvdisasm | 12.2.140 |
| cuda-nvml-dev | 12.2.140 |
| cuda-nvprof | 12.2.142 |
| cuda-nvprune | 12.2.140 |
| cuda-nvrtc | 12.2.140 |
| cuda-nvrtc-dev | 12.2.140 |
| cuda-nvrtc-static | 12.2.140 |
| cuda-nvtx | 12.2.140 |
| cuda-nvvp | 12.2.142 |
| cuda-opencl | 12.2.140 |
| cuda-opencl-dev | 12.2.140 |
| cuda-profiler-api | 12.2.140 |
| cuda-runtime | 12.2.2 |
| cuda-sanitizer-api | 12.2.140 |
| cuda-toolkit | 12.2.2 |
| cuda-tools | 12.2.2 |
| cuda-visual-tools | 12.2.2 |
| cycler | 0.12.1 |
| cymem | 2.0.8 |
| cython | 3.0.10 |
| cytoolz | 0.12.3 |
| dask | 2024.4.2 |
| dask-core | 2024.4.2 |
| dask-expr | 1.0.12 |
| datalayer-kernels | 0.0.7 |
| dav1d | 1.2.1 |
| debugpy | 1.8.1 |
| decorator | 5.1.1 |
| defusedxml | 0.7.1 |
| deprecation | 2.1.0 |
| dill | 0.3.8 |
| distributed | 2024.4.2 |
| distro | 1.9.0 |
| entrypoints | 0.4 |
| et_xmlfile | 1.1.0 |
| exceptiongroup | 1.2.0 |
| executing | 2.0.1 |
| fastai | 2.7.17 |
| fastcore | 1.7.8 |
| fastdownload | 0.0.7 |
| fastprogress | 1.0.3 |
| filelock | 3.16.1 |
| fmt | 10.2.1 |
| fonttools | 4.51.0 |
| fqdn | 1.5.1 |
| freetype | 2.12.1 |
| frozenlist | 1.4.1 |
| fsspec | 2024.3.1 |
| gds-tools | 1.9.1.3 |
| gflags | 2.2.2 |
| giflib | 5.2.2 |
| gitdb | 4.0.11 |
| gitpython | 3.1.43 |
| glog | 0.7.0 |
| gmp | 6.3.0 |
| gmpy2 | 2.1.2 |
| greenlet | 3.0.3 |
| h11 | 0.14.0 |
| h2 | 4.1.0 |
| h5py | 3.11.0 |
| hdf5 | 1.14.3 |
| hpack | 4.0.0 |
| httpcore | 1.0.5 |
| httpx | 0.27.0 |
| huggingface-hub | 0.25.0 |
| hyperframe | 6.0.1 |
| icu | 73.2 |
| idna | 3.7 |
| imagecodecs | 2024.1.1 |
| imageio | 2.34.0 |
| importlib-metadata | 7.1.0 |
| importlib_metadata | 7.1.0 |
| importlib_resources | 6.4.0 |
| intake | 2.0.7 |
| ipykernel | 6.29.3 |
| ipympl | 0.9.4 |
| ipython | 8.22.2 |
| ipython_genutils | 0.2.0 |
| ipywidgets | 8.0.4 |
| isoduration | 20.11.0 |
| jedi | 0.19.1 |
| jinja2 | 3.1.3 |
| joblib | 1.4.0 |
| json5 | 0.9.25 |
| jsonpatch | 1.33 |
| jsonpointer | 2.4 |
| jsonschema | 4.21.1 |
| jsonschema-specifications | 2023.12.1 |
| jsonschema-with-format-nongpl | 4.21.1 |
| jupyter-lsp | 2.2.5 |
| jupyter-packaging | 0.12.0 |
| jupyter-server | 2.12.0.dev0 |
| jupyter-server-mathjax | 0.2.6 |
| jupyter-server-proxy | 3.2.1 |
| jupyter_client | 8.6.1 |
| jupyter_core | 5.7.2 |
| jupyter_events | 0.10.0 |
| jupyter_server_terminals | 0.5.3 |
| jupyter_telemetry | 0.1.0 |
| jupyterhub | 4.1.5 |
| jupyterhub-base | 4.1.5 |
| jupyterlab | 4.1.0b0 |
| jupyterlab-git | 0.50.0 |
| jupyterlab_pygments | 0.3.0 |
| jupyterlab_server | 2.26.0 |
| jupyterlab_widgets | 3.0.10 |
| jxrlib | 1.1 |
| keyutils | 1.6.1 |
| kiwisolver | 1.4.5 |
| krb5 | 1.21.2 |
| langchain | 0.3.0 |
| langchain-core | 0.3.1 |
| langchain-text-splitters | 0.3.0 |
| langcodes | 3.4.0 |
| langsmith | 0.1.122 |
| language-data | 1.2.0 |
| lazy_loader | 0.4 |
| lcms2 | 2.16 |
| ld_impl_linux-64 | 2.4 |
| lerc | 4.0.0 |
| libabseil | 20240116.1 |
| libaec | 1.1.3 |
| libarchive | 3.7.2 |
| libarrow | 15.0.2 |
| libarrow-acero | 15.0.2 |
| libarrow-dataset | 15.0.2 |
| libarrow-flight | 15.0.2 |
| libarrow-flight-sql | 15.0.2 |
| libarrow-gandiva | 15.0.2 |
| libarrow-substrait | 15.0.2 |
| libavif16 | 1.0.4 |
| libblas | 3.9.0 |
| libbrotlicommon | 1.1.0 |
| libbrotlidec | 1.1.0 |
| libbrotlienc | 1.1.0 |
| libcblas | 3.9.0 |
| libcrc32c | 1.1.2 |
| libcublas | 12.4.5.8 |
| libcublas-dev | 12.4.5.8 |
| libcublas-static | 12.4.5.8 |
| libcufft | 11.2.1.3 |
| libcufft-dev | 11.2.1.3 |
| libcufft-static | 11.2.1.3 |
| libcufile | 1.9.1.3 |
| libcufile-dev | 1.9.1.3 |
| libcufile-static | 1.9.1.3 |
| libcurand | 10.3.5.147 |
| libcurand-dev | 10.3.5.147 |
| libcurand-static | 10.3.5.147 |
| libcurl | 8.7.1 |
| libcusolver | 11.6.1.9 |
| libcusolver-dev | 11.6.1.9 |
| libcusolver-static | 11.6.1.9 |
| libcusparse | 12.3.1.170 |
| libcusparse-dev | 12.3.1.170 |
| libcusparse-static | 12.3.1.170 |
| libdeflate | 1.2 |
| libedit | 3.1.20191231 |
| libev | 4.33 |
| libevent | 2.1.12 |
| libexpat | 2.6.2 |
| libffi | 3.4.2 |
| libgcc | 14.1.0 |
| libgcc-ng | 14.1.0 |
| libgfortran-ng | 13.2.0 |
| libgfortran5 | 13.2.0 |
| libgomp | 14.1.0 |
| libgoogle-cloud | 2.22.0 |
| libgoogle-cloud-storage | 2.22.0 |
| libgrpc | 1.62.2 |
| libhwy | 1.1.0 |
| libiconv | 1.17 |
| libjpeg-turbo | 3.0.0 |
| libjxl | 0.10.2 |
| liblapack | 3.9.0 |
| liblapacke | 3.9.0 |
| libllvm14 | 14.0.6 |
| libllvm16 | 16.0.6 |
| libmamba | 1.5.8 |
| libmambapy | 1.5.8 |
| libnghttp2 | 1.58.0 |
| libnl | 3.9.0 |
| libnpp | 12.2.5.30 |
| libnpp-dev | 12.2.5.30 |
| libnpp-static | 12.2.5.30 |
| libnsl | 2.0.1 |
| libnvjitlink | 12.4.127 |
| libnvjitlink-dev | 12.4.127 |
| libnvjpeg | 12.3.1.117 |
| libnvjpeg-dev | 12.3.1.117 |
| libnvjpeg-static | 12.3.1.117 |
| libopenblas | 0.3.27 |
| libparquet | 15.0.2 |
| libpng | 1.6.43 |
| libprotobuf | 4.25.3 |
| libre2-11 | 2023.09.01 |
| libsodium | 1.0.18 |
| libsolv | 0.7.28 |
| libsqlite | 3.45.3 |
| libssh2 | 1.11.0 |
| libstdcxx-ng | 13.2.0 |
| libthrift | 0.19.0 |
| libtiff | 4.6.0 |
| libutf8proc | 2.8.0 |
| libuuid | 2.38.1 |
| libuv | 1.48.0 |
| libwebp-base | 1.4.0 |
| libxcb | 1.15 |
| libxcrypt | 4.4.36 |
| libxml2 | 2.12.6 |
| libzlib | 1.2.13 |
| libzopfli | 1.0.3 |
| llvm-openmp | 18.1.3 |
| llvmlite | 0.42.0 |
| locket | 1.0.0 |
| lz4 | 4.3.3 |
| lz4-c | 1.9.4 |
| lzo | 2.1 |
| mako | 1.3.3 |
| mamba | 1.5.8 |
| marisa-trie | 1.2.0 |
| markdown-it-py | 3.0.0 |
| markupsafe | 2.1.5 |
| matplotlib-base | 3.8.4 |
| matplotlib-inline | 0.1.7 |
| mdurl | 0.1.2 |
| menuinst | 2.0.2 |
| mistune | 3.0.2 |
| mpc | 1.3.1 |
| mpfr | 4.2.1 |
| mpmath | 1.3.0 |
| msgpack-python | 1.0.7 |
| multidict | 6.1.0 |
| munkres | 1.1.4 |
| murmurhash | 1.0.10 |
| nbclassic | 1.0.0 |
| nbclient | 0.10.0 |
| nbconvert | 7.16.3 |
| nbconvert-core | 7.16.3 |
| nbconvert-pandoc | 7.16.3 |
| nbdime | 4.0.1 |
| nbformat | 5.10.4 |
| ncurses | 6.4.20240210 |
| nest-asyncio | 1.6.0 |
| networkx | 3.3 |
| nodejs | 20.9.0 |
| nomkl | 1 |
| notebook | 7.1.3 |
| notebook-shim | 0.2.4 |
| nsight-compute | 2024.1.1.4 |
| numba | 0.59.1 |
| numexpr | 2.9.0 |
| numpy | 1.26.4 |
| nvidia-cublas-cu12 | 12.1.3.1 |
| nvidia-cuda-cupti-cu12 | 12.1.105 |
| nvidia-cuda-nvrtc-cu12 | 12.1.105 |
| nvidia-cuda-runtime-cu12 | 12.1.105 |
| nvidia-cudnn-cu12 | 9.1.0.70 |
| nvidia-cufft-cu12 | 11.0.2.54 |
| nvidia-curand-cu12 | 10.3.2.106 |
| nvidia-cusolver-cu12 | 11.4.5.107 |
| nvidia-cusparse-cu12 | 12.1.0.106 |
| nvidia-nccl-cu12 | 2.20.5 |
| nvidia-nvjitlink-cu12 | 12.6.68 |
| nvidia-nvtx-cu12 | 12.1.105 |
| oauthlib | 3.2.2 |
| openblas | 0.3.27 |
| openjpeg | 2.5.2 |
| openpyxl | 3.1.2 |
| openssl | 3.3.2 |
| orc | 2.0.0 |
| orjson | 3.10.7 |
| overrides | 7.7.0 |
| packaging | 24 |
| pamela | 1.1.0 |
| pandas | 2.2.2 |
| pandoc | 3.1.13 |
| pandocfilters | 1.5.0 |
| parso | 0.8.4 |
| partd | 1.4.1 |
| patsy | 0.5.6 |
| pexpect | 4.9.0 |
| pickleshare | 0.7.5 |
| pillow | 10.3.0 |
| pip | 24 |
| pkgutil-resolve-name | 1.3.10 |
| platformdirs | 4.2.0 |
| plotly | 5.24.1 |
| pluggy | 1.5.0 |
| preshed | 3.0.9 |
| prometheus_client | 0.20.0 |
| prompt-toolkit | 3.0.42 |
| protobuf | 4.25.3 |
| psutil | 5.9.8 |
| pthread-stubs | 0.4 |
| ptyprocess | 0.7.0 |
| pure_eval | 0.2.2 |
| py-cpuinfo | 9.0.0 |
| pyarrow | 15.0.2 |
| pyarrow-hotfix | 0.6 |
| pybind11-abi | 4 |
| pycosat | 0.6.6 |
| pycparser | 2.22 |
| pycurl | 7.45.3 |
| pydantic | 2.9.2 |
| pydantic-core | 2.23.4 |
| pygments | 2.17.2 |
| pyjwt | 2.8.0 |
| pyopenssl | 24.0.0 |
| pyparsing | 3.1.2 |
| pysocks | 1.7.1 |
| pytables | 3.9.2 |
| python | 3.11.9 |
| python-dateutil | 2.9.0 |
| python-fastjsonschema | 2.19.1 |
| python-json-logger | 2.0.7 |
| python-tzdata | 2024.1 |
| python_abi | 3.11 |
| pytz | 2024.1 |
| pywavelets | 1.4.1 |
| pyyaml | 6.0.1 |
| pyzmq | 26.0.2 |
| rav1e | 0.6.6 |
| rdma-core | 51 |
| re2 | 2023.09.01 |
| readline | 8.2 |
| referencing | 0.34.0 |
| regex | 2024.9.11 |
| reproc | 14.2.4.post0 |
| reproc-cpp | 14.2.4.post0 |
| requests | 2.31.0 |
| rfc3339-validator | 0.1.4 |
| rfc3986-validator | 0.1.1 |
| rich | 13.8.1 |
| rpds-py | 0.18.0 |
| ruamel.yaml | 0.18.6 |
| ruamel.yaml.clib | 0.2.8 |
| s2n | 1.4.12 |
| safetensors | 0.4.5 |
| scikit-image | 0.22.0 |
| scikit-learn | 1.4.2 |
| scipy | 1.13.0 |
| seaborn | 0.13.2 |
| seaborn-base | 0.13.2 |
| send2trash | 1.8.3 |
| setuptools | 69.5.1 |
| shellingham | 1.5.4 |
| simpervisor | 1.0.0 |
| six | 1.16.0 |
| smart-open | 7.0.4 |
| smmap | 5.0.0 |
| snappy | 1.2.0 |
| sniffio | 1.3.1 |
| sortedcontainers | 2.4.0 |
| soupsieve | 2.5 |
| spacy | 3.7.6 |
| spacy-legacy | 3.0.12 |
| spacy-loggers | 1.0.5 |
| sqlalchemy | 2.0.29 |
| srsly | 2.4.8 |
| stack_data | 0.6.2 |
| statsmodels | 0.14.1 |
| svt-av1 | 2.0.0 |
| sympy | 1.12 |
| tblib | 3.0.0 |
| tenacity | 8.5.0 |
| terminado | 0.18.1 |
| thinc | 8.2.5 |
| threadpoolctl | 3.4.0 |
| tifffile | 2024.4.18 |
| tinycss2 | 1.2.1 |
| tk | 8.6.13 |
| tokenizers | 0.19.1 |
| tomli | 2.0.1 |
| tomlkit | 0.13.2 |
| toolz | 0.12.1 |
| torch | 2.4.1 |
| torchaudio | 2.4.1 |
| torchvision | 0.19.1 |
| tornado | 6.4 |
| tqdm | 4.66.2 |
| traitlets | 5.14.3 |
| transformers | 4.44.2 |
| triton | 3.0.0 |
| truststore | 0.8.0 |
| typer | 0.12.5 |
| types-python-dateutil | 2.9.0.20240316 |
| typing-extensions | 4.11.0 |
| typing_extensions | 4.11.0 |
| typing_utils | 0.1.0 |
| tzdata | 2024a |
| ucx | 1.15.0 |
| uri-template | 1.3.0 |
| urllib3 | 2.2.1 |
| wasabi | 1.1.3 |
| wcwidth | 0.2.13 |
| weasel | 0.4.1 |
| webcolors | 1.13 |
| webencodings | 0.5.1 |
| websocket-client | 1.7.0 |
| wheel | 0.43.0 |
| widgetsnbextension | 4.0.10 |
| wrapt | 1.16.0 |
| xlrd | 2.0.1 |
| xorg-libxau | 1.0.11 |
| xorg-libxdmcp | 1.1.3 |
| xyzservices | 2024.4.0 |
| xz | 5.2.6 |
| yaml | 0.2.5 |
| yaml-cpp | 0.8.0 |
| yarl | 1.11.1 |
| zeromq | 4.3.5 |
| zfp | 1.0.1 |
| zict | 3.0.0 |
| zipp | 3.17.0 |
| zlib | 1.2.13 |
| zlib-ng | 2.0.7 |
| zstandard | 0.22.0 |
| zstd | 1.5.5 |
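Because the environment is read-only, you may want to confirm that the versions your code depends on match the table before you run a long job. The sketch below uses the standard library's `importlib.metadata`; the helper name `check_versions` is hypothetical. It can only see Python distributions, so native packages in the table such as `cuda` or `libgcc` will show up as missing. A few conda package names also differ from the Python distribution name: for example, `matplotlib-base` provides the `matplotlib` distribution.

```python
from importlib import metadata
from typing import Dict, Optional


def check_versions(expected: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Return {name: installed_version} for every package that doesn't match.

    A value of None means the distribution isn't installed, or isn't a Python
    distribution that importlib.metadata can see (such as cuda or libgcc).
    Packages whose installed version matches exactly are omitted.
    """
    mismatches: Dict[str, Optional[str]] = {}
    for name, wanted in expected.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        if installed != wanted:
            mismatches[name] = installed
    return mismatches
```

For example, `check_versions({"torch": "2.4.1", "numpy": "1.26.4"})` returns an empty dict when both match. Installed version strings can carry local suffixes (such as a CUDA build tag), so inspect any reported mismatch before concluding that the wrong package is installed.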

Transferring variables in GPU kernel cells

Because cells assigned a GPU kernel run in a separate environment from the rest of the notebook, variables must be transferred between the cell and the notebook to ensure they are accessible in both environments.

Follow these steps to transfer variables:

1. Click Variable transfer beside a cell with a GPU kernel assigned.
2. Enter the inputs and/or outputs to be transferred between the notebook and cell environments.
3. Click Set variables.

The inputs and/or outputs can now be used in both the GPU kernel cell and the notebook.
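As an illustration, suppose the notebook prepares a list called `data` and a GPU kernel cell computes `result` from it. In the Variable transfer dialog, you would enter `data` as an input and `result` as an output. The variable names and the computation are hypothetical, and the three commented sections stand for three separate notebook cells (shown here as one script so it can run anywhere). Note that the GPU cell makes its own imports, because it runs in a separate environment.

```python
# --- Cell 1: notebook's default kernel ---------------------------------
# Prepare the input in the notebook's own environment.
data = [float(i) for i in range(1_000)]

# --- Cell 2: cell assigned to the GPU kernel ----------------------------
# Assumed Variable transfer settings: input `data`, output `result`.
# This cell runs in a separate environment, so it imports what it needs
# itself; imports made in other cells are not available here.
try:
    import torch
except ImportError:
    torch = None

if torch is not None:
    device = "cuda" if torch.cuda.is_available() else "cpu"
    values = torch.tensor(data, dtype=torch.float64, device=device)
    result = float((values ** 2).sum())  # plain float, not a CUDA tensor
else:
    result = float(sum(x * x for x in data))  # CPU fallback so the sketch still runs

# --- Cell 3: notebook's default kernel ---------------------------------
# `result` is available here once it is listed as an output.
print(f"sum of squares: {result:.1f}")
```

The GPU cell converts its output to a plain Python `float` instead of handing back a CUDA tensor. This is a precaution rather than a documented requirement: simple Python values seem the safest choice when the receiving environment may not have a GPU or PyTorch.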

Managing usage

When you assign a GPU kernel to a notebook, you're asked to reserve a number of minutes of GPU execution time. Reserved time is charged in credits, with one credit equal to 100 real-world seconds, so one minute of reserved time costs 0.6 credits.

The reserved time starts counting down as soon as the kernel session launches, and it keeps counting down whether your code is running or the notebook is idle.

To see how much reserved time is left, hover over the countdown bar in the top-right corner.

Caution

When your reserved time drops below five minutes (300 seconds), the countdown bar turns red. Once the reserved time hits zero, the GPU kernel session automatically terminates.
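Because the session ends as soon as the reserved time reaches zero, a long-running loop can lose unsaved work. One defensive pattern is to track the reservation yourself and stop, or save a checkpoint, before time runs out. In the sketch below, `ReservationClock` and `run_until_deadline` are hypothetical helpers, and the reservation length is a value you pass in to match what you reserved; nothing here reads the countdown bar. The default 300-second margin mirrors the point at which the countdown bar turns red.

```python
import time
from typing import Callable


class ReservationClock:
    """Track a GPU reservation locally, starting when the object is created.

    The real countdown starts when the session launches and runs whether or
    not code is executing, so create this as early as possible.
    """

    def __init__(self, reserved_minutes: float, safety_margin_s: float = 300.0):
        self._deadline = time.monotonic() + reserved_minutes * 60.0
        self.safety_margin_s = safety_margin_s

    def remaining_s(self) -> float:
        """Seconds left before the reservation, as tracked here, runs out."""
        return max(0.0, self._deadline - time.monotonic())

    def should_stop(self) -> bool:
        """True once fewer than `safety_margin_s` seconds remain."""
        return self.remaining_s() < self.safety_margin_s


def run_until_deadline(clock: ReservationClock, step_fn: Callable[[], None], max_steps: int) -> int:
    """Call step_fn() up to max_steps times, stopping early near the deadline.

    Returns the number of completed steps so the caller can checkpoint and resume.
    """
    for step in range(max_steps):
        if clock.should_stop():
            return step  # save a checkpoint here before the session terminates
        step_fn()
    return max_steps
```

Since this clock starts when you create it rather than when the session launched, create it early (for example, in the first cell) and keep the margin generous.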

To conserve your GPU credits, Anaconda recommends one of two approaches: assign a GPU kernel only to the individual cells that need more compute power, rather than to the entire notebook; or wait to assign the GPU kernel until you're ready to run your code, then stop the GPU kernel session as soon as the code finishes executing.

You can stop a GPU kernel session in either of two ways.

From the Kernels menu

1. Select Kernels from the top menu bar.
2. Select Shut Down All to shut down all kernels.
3. In the confirmation dialog, click Shut Down All.

From the countdown bar

1. In a notebook with an assigned GPU kernel, click the top-right countdown bar.
2. On the Jupyter Kernels page, click Delete next to the kernel you'd like to shut down.

Usage history

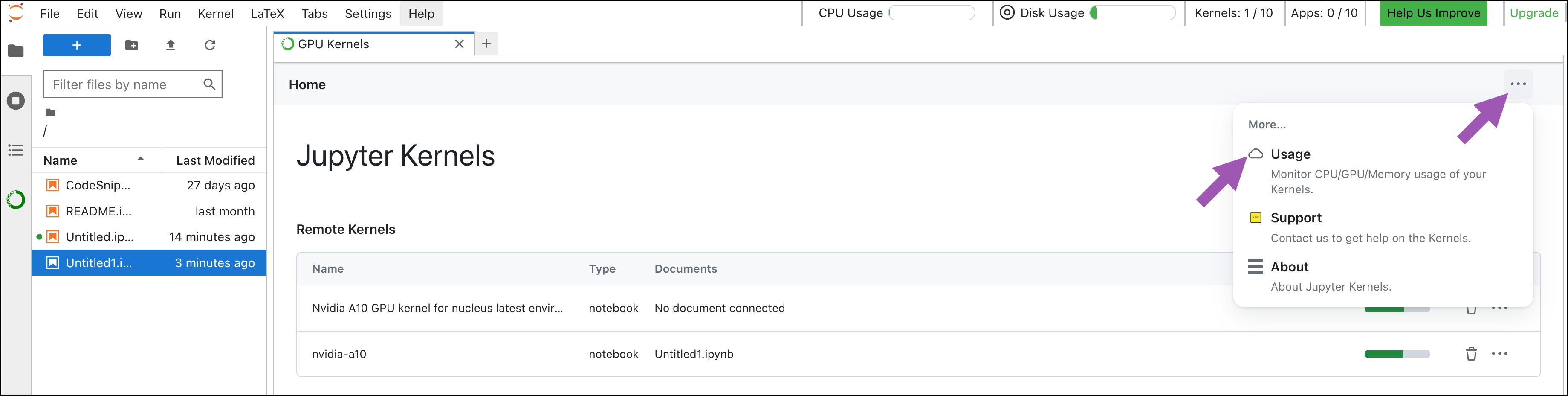

To view your GPU usage statistics and remaining credits, open the actions dropdown in the top-right corner of the Jupyter Kernels home page, then select Usage.

The Overview page shows your running GPU kernels, remaining credits, and historical credit consumption.

The History page shows your usage history, including each GPU session's kernel name, type, start date, end date, credits used, and duration.

The Reservations page shows any currently running GPU kernels, the credits assigned to the reservation session, and the reservation start and end times.

Using credits

Anaconda offers credits to access GPU compute time. One credit equals 100 real-world seconds. Note that this is different from how high-compute seconds are calculated for CPU use.

Credits are deducted automatically as reserved time is used. For example, 10 minutes of reserved time is 600 seconds, so 6.00 credits are deducted from your account.

View your remaining credits on the Usage Overview page.

At this time, Anaconda is offering 500 free credits (50,000 seconds, or about 13.9 hours of GPU time) to select paid subscribers only. See the Note at the top of this page for information about upgrading your subscription to gain access.
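Converting between reserved time and credits is simple arithmetic: minutes × 60 seconds ÷ 100 seconds per credit. The helper names below are hypothetical; the only figure taken from this page is the rate of 100 seconds per credit.

```python
SECONDS_PER_CREDIT = 100  # one credit equals 100 real-world seconds


def minutes_to_credits(minutes: float) -> float:
    """Credits consumed by `minutes` of reserved GPU time."""
    return minutes * 60 / SECONDS_PER_CREDIT


def credits_to_minutes(credits: float) -> float:
    """Minutes of reserved GPU time covered by `credits`."""
    return credits * SECONDS_PER_CREDIT / 60
```

For example, `minutes_to_credits(10)` returns `6.0`, in line with the 10-minute example above, and `credits_to_minutes(500)` is about 833 minutes.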

Troubleshooting

I ran out of credits

To request additional GPU credits, file a support ticket.

The GPU kernel does not start up

Reload the Anaconda Notebooks page and then click “Launch a Remote Kernel” from the Launcher. If you’re still unable to run a GPU kernel, file a support ticket.

My code isn't running

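Check for missing import statements and verify that variables were correctly transferred to the GPU kernel. If you assigned the GPU kernel to individual cells, remember that those cells run in a separate environment: imports and variables defined elsewhere in the notebook aren't available there unless you import them again in the cell or transfer them.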
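If a GPU kernel cell fails with a `NameError` or `ModuleNotFoundError`, a quick check at the top of the cell can show what's missing. The `diagnose` helper below is a hypothetical sketch that uses only the standard library. It reports modules that can't be found in the current environment and expected variable names that aren't defined in the namespace you pass in (typically `globals()`).

```python
import importlib.util
from typing import Dict, Iterable, List, Mapping


def _importable(name: str) -> bool:
    """True if `name` can be found as a module in the current environment."""
    try:
        return importlib.util.find_spec(name) is not None
    except (ModuleNotFoundError, ValueError):  # e.g., a dotted name whose parent is missing
        return False


def diagnose(
    modules: Iterable[str], variables: Iterable[str], namespace: Mapping[str, object]
) -> Dict[str, List[str]]:
    """Report modules that can't be found and variable names missing from `namespace`."""
    return {
        "missing_modules": [m for m in modules if not _importable(m)],
        "missing_variables": [v for v in variables if v not in namespace],
    }
```

For example, running `diagnose(["torch"], ["data"], globals())` in a GPU cell would flag `data` if it was not set up as a transferred input.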